API Traffic Throttling

Objective

This page explains what API Traffic Throttling is and how it works in SOAJS, as well as how throttling can be configured per environment, per tenant and per IP address.

The page also shows how you can set default throttling strategies per environment and then override these defaults at the tenant level.

Terminology

API Traffic Throttling refers to the rate limiting policy that the API Gateway applies to incoming requests. The algorithm defines how many requests are handled within a time window and whether requests that exceed the limit are queued or rejected.

The following terms are used by the throttling algorithm:

| Name | Definition |
|---|---|
| Type | The scope at which SOAJS applies throttling: per tenant, or per tenant and per IP address. |
| Window | The interval during which requests are counted. Requests are handled as long as the total number received in the current window has not reached the limit. Once the limit is reached, further requests are either queued or rejected (see the sections below). |
| Limit | The maximum number of requests that can be handled within one window. |
| Retries | The number of times an over-quota request is queued and re-tried before it is rejected. |
| Delay | The time to wait between retries. |
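To make the terms above concrete, here is a minimal sketch of a strategy record. The class name, field names and the `"tenant"` / `"tenant+ip"` labels are assumptions made for illustration; they are not the SOAJS registry schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThrottlingStrategy:
    """Hypothetical strategy record built from the terms in the table."""

    type: str       # "tenant" or "tenant+ip" (illustrative labels)
    window_ms: int  # length of the window in milliseconds
    limit: int      # maximum requests handled per window
    retries: int    # how many times an over-quota request is re-tried
    delay_ms: int   # wait between retries in milliseconds

    def __post_init__(self) -> None:
        if self.window_ms <= 0 or self.limit <= 0:
            raise ValueError("window and limit must be positive")
        if self.retries < 0 or self.delay_ms < 0:
            raise ValueError("retries and delay cannot be negative")

    @property
    def max_queue_time_ms(self) -> int:
        # Longest time a request can spend queued before a final decision.
        return self.retries * self.delay_ms
```

With a 500 ms delay and 2 retries, `max_queue_time_ms` is 1000, which is the longest extra latency a single request can accumulate while queued.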

When requests are queued, the response returned contains the following headers:

| Name | Definition |
|---|---|
| X-Ratelimit-Remaining | The quota still available within the configured limit |
| X-Ratelimit-Limit | The maximum number of requests allowed per window |
| X-Ratelimit-Reset | The remaining time, in milliseconds, until the current window closes and a new window starts |
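The header values follow directly from the state of the current window. The sketch below shows one way to derive them; `WindowState` and `rate_limit_headers` are hypothetical names used for illustration, not part of the SOAJS code base.

```python
from dataclasses import dataclass


@dataclass
class WindowState:
    limit: int          # maximum requests per window
    used: int           # requests already counted in this window
    window_ms: int      # window length in milliseconds
    started_at_ms: int  # timestamp at which the window opened


def rate_limit_headers(state: WindowState, now_ms: int) -> dict:
    """Build the three headers from the window state (illustrative only)."""
    remaining = max(state.limit - state.used, 0)
    reset_ms = max(state.started_at_ms + state.window_ms - now_ms, 0)
    return {
        "X-Ratelimit-Limit": str(state.limit),
        "X-Ratelimit-Remaining": str(remaining),
        "X-Ratelimit-Reset": str(reset_ms),
    }
```

For a 10-second window with a limit of 5 that is fully consumed, a call at the 8-second mark reports a remaining quota of 0 and a reset time of 2000 ms.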

Architecture

The throttling algorithm is created on demand when the first request is received for a specific tenant. This event fixes the start time of the window. All subsequent requests consume quota from this window until it expires. When the quota is exhausted, one of two things happens:

- Requests are rejected

- Requests are queued

When the window closes, the quota is reset and a new window of the same length starts. This is why, when multiple strategies are configured, each strategy must define its own window, the request limit for that window, the total number of retries and the delay between retries.

Because throttling is intended to smooth spikes, over-quota requests are delayed and re-tried after the delay period.
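The behaviour described above can be sketched as a fixed-window counter with a retry loop. This is an illustration under stated assumptions, not the gateway's actual implementation: the class and function names are invented, time is passed in explicitly instead of sleeping, and new windows are assumed to start back to back with the previous one.

```python
class FixedWindowThrottle:
    """Fixed-window counter; the first request opens the window."""

    def __init__(self, window_ms: int, limit: int) -> None:
        self.window_ms = window_ms
        self.limit = limit
        self.window_start = None  # fixed by the first request
        self.used = 0

    def try_acquire(self, now_ms: int) -> bool:
        if self.window_start is None:
            self.window_start = now_ms
        elif now_ms - self.window_start >= self.window_ms:
            # The window expired: open a new one and reset the quota.
            elapsed = (now_ms - self.window_start) // self.window_ms
            self.window_start += elapsed * self.window_ms
            self.used = 0
        if self.used < self.limit:
            self.used += 1
            return True
        return False


def handle(throttle, arrival_ms, retries, delay_ms):
    """Return the time the request is accepted, or None if it is rejected."""
    now = arrival_ms
    for attempt in range(retries + 1):
        if throttle.try_acquire(now):
            return now
        if attempt < retries:
            now += delay_ms  # queued: wait for the delay, then re-try
    return None
```

Passing timestamps instead of calling a clock keeps the sketch deterministic, so the same request sequence always produces the same outcome.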

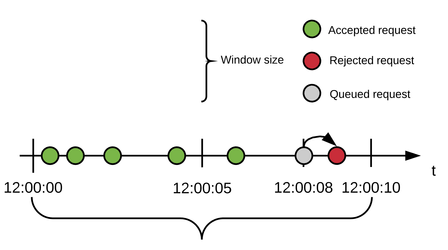

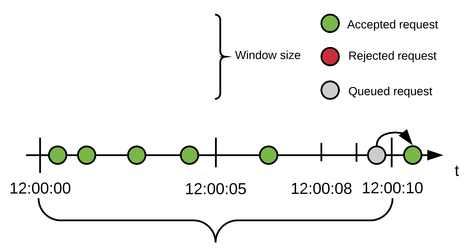

Suppose you configure a strategy with a 10-second window, a limit of 5 requests, and 2 retries with a 500 ms delay.

The tables below show how one request is rejected and how another is queued, re-tried after the delay period and accepted.

| Rejected Request |
|---|
| 5 requests arrive within the first seconds of the window. At 8 seconds, a new request comes in. Because the quota has been exhausted, the request is queued and delayed for 500 ms as configured. At 8.5 seconds, the queued request is re-tried, but since the window has not closed yet and no quota is available, the request is queued again for another 500 ms. At 9 seconds, the queued request is re-tried once more. The window still has not closed, no quota is available and no more retries are allowed, so the request is rejected. |

| Queued & Accepted Request |
|---|
| 5 requests arrive within the first seconds of the window. At 9.7 seconds, a new request comes in. Because the quota has been exhausted, the request is queued and delayed for 500 ms as configured. At 10 seconds, the window closes, a new window of the same length starts and the quota resets to 5. At 10.2 seconds, the queued request is re-tried. This time quota is available, so the request is accepted. |
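The arithmetic behind both tables can be checked with a small helper. It assumes the window opened at time 0, that its quota is already exhausted when the request arrives, and that the next window has free quota; the function name is hypothetical and times are in milliseconds.

```python
def retry_outcome(arrival_ms: int, window_ms: int, retries: int, delay_ms: int):
    """Return ("accepted" | "rejected", time of the final attempt)."""
    # The first attempt happens on arrival, then once per retry.
    attempts = [arrival_ms + k * delay_ms for k in range(retries + 1)]
    for t in attempts:
        if t >= window_ms:  # the window has closed, quota is available again
            return ("accepted", t)
    return ("rejected", attempts[-1])


rejected = retry_outcome(8000, 10000, retries=2, delay_ms=500)
accepted = retry_outcome(9700, 10000, retries=2, delay_ms=500)
```

Here `rejected` is `("rejected", 9000)` and `accepted` is `("accepted", 10200)`, matching the 9-second rejection and the 10.2-second acceptance in the tables.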

API Traffic Throttling is handled natively by the API Gateway. The gateway pulls the environment registry configuration and caches it in memory. When requests arrive, it reads the configuration from memory and uses it to evaluate them.

This allows the Gateway to operate in a light mode, avoiding calls to the database when evaluating requests and deciding whether they should be forwarded to your microservice or rejected.

If the environment where the gateway is running consists of only one machine, the throttling strategy executes exactly as shown in the diagrams above.

However, SOAJS supports distributed architectures and lets you deploy multiple instances of the same microservice when using Single Cloud Clustering, with either container technology or virtual machine layers as infrastructure.

This means that when you use single cloud clustering and deploy several instances of the SOAJS API Gateway, the registry configuration is cached in each instance, and the throttling strategy with it.

This architecture heavily affects how you should configure your throttling strategy because the number of gateway instances now changes the effective rate limit.

Suppose you configure a strategy with a 10-second window, a limit of 5 requests, and 1 retry with a 500 ms delay, and this time you have 2 instances of the SOAJS API Gateway deployed in the environment.

The result of this configured strategy becomes:

| Gateway | Resulting strategy |
|---|---|
| SOAJS API GATEWAY INSTANCE 1 | 10 seconds window, 5 requests, 1 retry with 500 ms |
| SOAJS API GATEWAY INSTANCE 2 | 10 seconds window, 5 requests, 1 retry with 500 ms |
| Total Throttling outcome | 10 seconds window, ( 2 x 5 ) = 10 requests, 1 retry with 500 ms |

As you can see, the limit is multiplied by the number of instances deployed: instead of 5, it is now 10. The remaining attributes of the strategy are not affected because they do not depend on the number of instances; only the limit is impacted.

Be careful when configuring your throttling limit and take the number of running instances into account.
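The multiplication above, and the reverse calculation you need when you start from a desired overall limit, can be sketched as follows. Both helper names are hypothetical. The second helper rounds down, so the combined limit never exceeds the target.

```python
def effective_limit(per_instance_limit: int, instances: int) -> int:
    """Combined limit when every gateway instance enforces its own copy."""
    return per_instance_limit * instances


def per_instance_limit(target_total: int, instances: int) -> int:
    """Limit to configure so the combined limit stays at or below the target."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    # Round down, but never configure a limit below 1 request per window.
    return max(target_total // instances, 1)
```

For example, `effective_limit(5, 2)` gives 10, and `per_instance_limit(10, 2)` gives back 5. When the target does not divide evenly, as in `per_instance_limit(5, 2)`, the result is 2 and the combined limit becomes 4 rather than 5.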

Per Tenant vs Per Tenant & Per IP Address

SOAJS also supports configuring throttling strategies per tenant or per tenant and per IP address.

When a request arrives at the SOAJS API Gateway, the gateway analyzes the request and loads the microservice configuration accordingly.

If the microservice states that it supports multitenancy, the Gateway retrieves the tenant key from the request headers and analyzes it. If the tenant is allowed to access this microservice, the request is accepted and throttling takes place.

If the key is invalid or not supplied, the gateway rejects the request and throttling does not occur.

Click on these links if you are not familiar with SOAJS Multitenancy, Key security or Access levels.
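The flow in this section can be sketched as two steps: validate the tenant key, then choose the counter that the request is throttled against. Every name below is an assumption made for illustration, including the `"tenant"` / `"tenant+ip"` labels and the key-to-tenant lookup table. The real gateway also checks access levels, which this sketch omits.

```python
from typing import Optional

PER_TENANT = "tenant"
PER_TENANT_AND_IP = "tenant+ip"


def throttle_bucket(tenant_id: str, client_ip: str, strategy_type: str) -> str:
    """Pick the counter a request is charged to."""
    if strategy_type == PER_TENANT_AND_IP:
        # Each IP address of a tenant gets its own window and quota.
        return f"{tenant_id}:{client_ip}"
    # All requests of the tenant share one window and quota.
    return tenant_id


def evaluate(key: Optional[str], known_keys: dict, client_ip: str,
             strategy_type: str) -> Optional[str]:
    """Return the bucket to throttle against, or None if rejected up front."""
    tenant_id = known_keys.get(key) if key else None
    if tenant_id is None:
        return None  # missing or invalid key: rejected, no throttling
    return throttle_bucket(tenant_id, client_ip, strategy_type)
```

With the per-tenant type, two clients of the same tenant share one quota. With the per-tenant and per-IP type, each client address consumes its own quota.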

Public vs Private APIs

In addition to the above, SOAJS lets you create multiple strategies and decide which one is applied to APIs that can be accessed without an oAuth Access Token (Public APIs) and which one is applied to APIs that require the oAuth Access Token of a logged-in user (Private APIs).

Private APIs require that you first log in to the SOAJS oAuth Microservice, take the access token it returns, add it to the request, and then call the API along with the tenant key. Public APIs do not need an access token in the request.

Click on these links if you are not familiar with SOAJS oAuth Microservice or Public / Private APIs.
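Selecting a strategy by API type can be pictured as a lookup in the cached configuration. The dictionary layout and key names below are hypothetical and only illustrate the idea of naming one default strategy for Public APIs and one for Private APIs. They are not the actual registry format.

```python
# Hypothetical in-memory configuration; values are illustrative.
throttling_config = {
    "public_api_strategy": "light",
    "private_api_strategy": "heavy",
    "strategies": {
        "light": {"window_ms": 10000, "limit": 5, "retries": 2, "delay_ms": 500},
        "heavy": {"window_ms": 10000, "limit": 50, "retries": 1, "delay_ms": 500},
    },
}


def pick_strategy(config: dict, api_is_private: bool) -> dict:
    """Return the strategy assigned to the API's access type."""
    key = "private_api_strategy" if api_is_private else "public_api_strategy"
    return config["strategies"][config[key]]


public_strategy = pick_strategy(throttling_config, api_is_private=False)
private_strategy = pick_strategy(throttling_config, api_is_private=True)
```

Keeping the strategies in a named map and referring to them by name means the same strategy can be reused for both API types or swapped without redefining its values.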

Configuring Throttling per Environment

The SOAJS Console provides the ability to create & manage multiple throttling strategies as well as assign default strategies to use when invoking Public APIs and Private APIs.

The interface is located under Deploy → Registries UI Module and offers a ready-to-use wizard that guides you through each step.

Overriding Throttling at the Tenant level

Coming Soon